Reinforcement learning (RL) is a powerful machine learning paradigm that has seen significant advancements in recent years.

In this extensive article, we will dive deep into the fundamentals of reinforcement learning, introduce the Q-learning algorithm, and explore various real-world RL applications, particularly in game AI.

Along the way, we’ll provide code samples, fascinating examples, facts, figures, and even some smileys 😄 to keep you engaged!

Table of Contents

- Reinforcement Learning Fundamentals

- Q-Learning: A Popular Reinforcement Learning Algorithm

- Real-World Reinforcement Learning Applications

- Game AI and Reinforcement Learning

- Building Reinforcement Learning Models

Reinforcement Learning Fundamentals

What is Reinforcement Learning?

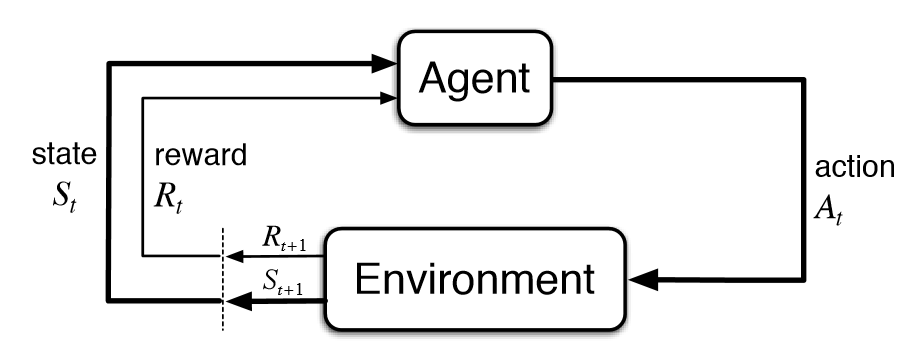

Reinforcement learning (RL) is a type of machine learning technique where an agent learns to make decisions by interacting with an environment.

In RL, an agent seeks to find the best actions to take in a given situation to maximize a cumulative reward. The agent learns through trial and error, adjusting its strategy based on feedback received from the environment.
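The "cumulative reward" is usually formalized as the discounted return: r_0 + γ·r_1 + γ²·r_2 + …, where the discount factor γ (between 0 and 1) makes rewards further in the future count for less. Here is a minimal sketch, assuming the rewards from one episode have already been collected in a list; the function name `discounted_return` is purely illustrative.

```python
def discounted_return(rewards, gamma):
    """Return r_0 + gamma * r_1 + gamma**2 * r_2 + ... for one episode.

    Iterates backwards so that each step is simply G = r + gamma * G.
    """
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Three rewards of 1 with gamma = 0.5: 1 + 0.5 + 0.25
print(discounted_return([1, 1, 1], 0.5))
```

Working backwards avoids computing powers of γ explicitly and is the same recursion that value-based methods such as Q-learning rely on.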

Key Components of Reinforcement Learning

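The core components of an RL system are:

- Agent: The entity that makes decisions and takes actions.

- Environment: The world in which the agent operates.

- State: A snapshot of the environment at a given time.

- Action: An operation performed by the agent on the environment.

- Reward: A numerical feedback signal the environment returns after each action, which the agent tries to maximize over time.

- Policy: The agent’s strategy for choosing an action in each state.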

The RL Process

The RL process typically involves the following steps:

- The agent observes the current state of the environment.

- The agent selects an action based on its current policy.

- The action is executed, changing the environment’s state.

- The agent receives a reward from the environment.

- The agent updates its policy based on the reward.

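This process is repeated, usually over many episodes, until the policy stops improving or a training budget is used up. Convergence to an optimal policy is guaranteed only for some algorithms and under specific conditions.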
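The steps above can be sketched as a simple loop. This is an illustration under stated assumptions, not a complete learner: `CorridorEnv` is a hypothetical toy environment (a five-cell corridor where reaching the rightmost cell yields a reward of 1 and ends the episode), the policy is purely random, and `update_policy` is a placeholder hook where a learning algorithm such as Q-learning would adjust the policy.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of cells 0..n_states-1.

    The agent starts in cell 0. Action 0 moves left, action 1 moves right.
    Reaching the last cell gives a reward of 1 and ends the episode.
    """

    def __init__(self, n_states=5):
        self.n_states = n_states
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        move = 1 if action == 1 else -1
        # Walls at both ends keep the agent inside the corridor
        self.state = min(max(self.state + move, 0), self.n_states - 1)
        done = self.state == self.n_states - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def random_policy(state, n_actions=2):
    # Placeholder policy: ignores the state and picks an action uniformly
    return random.randrange(n_actions)


def update_policy(state, action, reward, next_state):
    # Placeholder hook: a learning algorithm would update its policy here
    pass


def run_episode(env, policy, max_steps=100):
    state = env.reset()                                   # observe the current state
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                            # select an action
        next_state, reward, done = env.step(action)       # act and receive a reward
        update_policy(state, action, reward, next_state)  # update the policy
        total_reward += reward
        state = next_state
        if done:
            break
    return total_reward


random.seed(0)
print(run_episode(CorridorEnv(), random_policy))
```

Keeping the environment behind `reset` and `step` methods separates "the world" from "the agent", which is the same split most RL toolkits use.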

Q-Learning: A Popular Reinforcement Learning Algorithm

What is Q-Learning?

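Q-learning is a widely used model-free, value-based RL algorithm. It enables an agent to learn the optimal action-value function Q*(s, a): the expected cumulative (discounted) reward obtained by taking action a in state s and acting optimally from then on. "Model-free" means the agent learns directly from experience, without a model of how the environment transitions between states.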
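Formally, the optimal action-value function Q* satisfies the Bellman optimality equation, which the Q-learning update approximates using sampled transitions. Written with the same symbols as the update rule (reward r, next state s’, discount factor γ), as a standard textbook statement rather than anything specific to this article:

```latex
Q^*(s, a) = \mathbb{E}\left[\, r + \gamma \max_{a'} Q^*(s', a') \;\middle|\; s, a \,\right]
```

The expectation is over the reward and next state that the environment produces after taking action a in state s.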

Q-Learning Algorithm

The Q-learning algorithm consists of the following steps:

1. Initialize the Q-table with arbitrary values (commonly zeros).

2. Observe the current state of the environment (s).

3. Choose an action (a) for the current state using an exploration-exploitation strategy, such as the epsilon-greedy method.

4. Perform the chosen action and observe the new state (s’) and reward (r).

5. Update the Q-table using the Q-learning update rule, which is derived from the Bellman optimality equation: Q(s, a) ← Q(s, a) + α * [r + γ * max_a’ Q(s’, a’) - Q(s, a)]

6. Set the current state to the new state (s = s’).

7. Repeat steps 2-6, starting a new episode whenever a terminal state is reached, until the learning process converges or a stopping criterion is met.

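Here, α (alpha) is the learning rate, which sets how far each update moves the estimate toward the new target, and γ (gamma) is the discount factor, which controls how much future rewards count relative to immediate ones.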
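To make the update rule concrete, here is a single step with made-up numbers chosen only for illustration: current estimate Q(s, a) = 0.5, reward r = 1, best next-state value max_a’ Q(s’, a’) = 2, α = 0.1 and γ = 0.9. The target is 1 + 0.9 × 2 = 2.8, the error is 2.8 - 0.5 = 2.3, and the new estimate is 0.5 + 0.1 × 2.3 = 0.73. The helper `q_update` below is a hypothetical name for this one-line computation.

```python
def q_update(q_sa, reward, max_next_q, alpha, gamma):
    """One Q-learning update for a single (state, action) pair."""
    td_target = reward + gamma * max_next_q  # r + gamma * max_a' Q(s', a')
    td_error = td_target - q_sa              # how far off the current estimate is
    return q_sa + alpha * td_error           # move a fraction alpha toward the target


print(q_update(0.5, 1.0, 2.0, alpha=0.1, gamma=0.9))
```

Because α = 0.1, the estimate moves only 10% of the way toward the target; a smaller α averages over more experience, while a larger α reacts faster but is noisier.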

Python Code Example

Below is a simple Python code example illustrating Q-learning:

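```python
import numpy as np


class QLearning:
    """Tabular Q-learning agent with epsilon-greedy exploration."""

    def __init__(self, states, actions, alpha=0.1, gamma=0.99, epsilon=0.1):
        self.states = states      # number of discrete states
        self.actions = actions    # number of discrete actions
        self.alpha = alpha        # learning rate
        self.gamma = gamma        # discount factor
        self.epsilon = epsilon    # probability of taking a random action
        self.q_table = np.zeros((states, actions))

    def choose_action(self, state):
        # Explore: with probability epsilon, pick a uniformly random action
        if np.random.random() < self.epsilon:
            return np.random.choice(self.actions)
        # Exploit: pick a highest-valued action, breaking ties at random so a
        # zero-initialized table does not always favor action 0
        q = self.q_table[state]
        return int(np.random.choice(np.flatnonzero(q == q.max())))

    def update_q_table(self, state, action, reward, next_state, done=False):
        current_q = self.q_table[state, action]
        # A terminal state has no future value, so do not bootstrap from it
        max_future_q = 0.0 if done else np.max(self.q_table[next_state])
        new_q = current_q + self.alpha * (reward + self.gamma * max_future_q - current_q)
        self.q_table[state, action] = new_q
```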
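To show the algorithm steps working together, here is a minimal, self-contained training loop. It assumes a hypothetical five-cell corridor environment (action 0 moves left, action 1 moves right, and reaching the last cell gives a reward of 1 and ends the episode). It follows the same epsilon-greedy choice and update rule as the `QLearning` class above, written as plain functions for brevity; greedy ties are broken at random, and terminal transitions are not bootstrapped. The names `corridor_step` and `train` and the hyperparameter values are illustrative, not tuned.

```python
import numpy as np

N_STATES, N_ACTIONS = 5, 2  # hypothetical corridor: cells 0..4; action 0 = left, 1 = right
GOAL = N_STATES - 1


def corridor_step(state, action):
    """Hypothetical environment dynamics: return (next_state, reward, done)."""
    move = 1 if action == 1 else -1
    next_state = min(max(state + move, 0), GOAL)  # walls at both ends
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning on the toy corridor; returns the learned Q-table."""
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, N_ACTIONS))  # initialize the Q-table
    for _ in range(episodes):
        state = 0  # each episode starts in the leftmost cell
        for _ in range(max_steps):
            # Epsilon-greedy selection; greedy ties are broken at random
            if rng.random() < epsilon:
                action = int(rng.integers(N_ACTIONS))
            else:
                best = np.flatnonzero(q[state] == q[state].max())
                action = int(rng.choice(best))
            next_state, reward, done = corridor_step(state, action)
            # Q-learning update; terminal transitions do not bootstrap
            target = reward if done else reward + gamma * np.max(q[next_state])
            q[state, action] += alpha * (target - q[state, action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy action in each non-goal cell (1 means "move right")
print(np.argmax(q_table[:GOAL], axis=1))
```

With these settings the greedy action in every non-goal cell should end up as 1 (move right), since that is the shortest path to the reward; the exact Q-values depend on the seed and hyperparameters.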

Real-World Reinforcement Learning Applications

Robotics

Reinforcement learning has been widely used in robotics for tasks such as navigation, object manipulation, and locomotion.

For instance, OpenAI’s RoboSumo environment demonstrates how RL can be used to teach simulated robots to wrestle in a sumo-like arena 🤖.

Healthcare

In healthcare, RL has shown promise in personalized treatment planning, drug discovery, and medical diagnostics.

For example, researchers have used RL to optimize radiation therapy treatment plans for cancer patients, with the aim of making treatments both more effective and more efficient.

Finance

RL is used in finance for portfolio management, algorithmic trading, and credit risk assessment.

Companies such as J.P. Morgan and BNP Paribas have reportedly applied RL-based algorithms to trading and risk management 💹.

Game AI and Reinforcement Learning

Game AI Overview

Game AI refers to the use of artificial intelligence techniques to create intelligent agents that can play games, in some cases at a level surpassing the best human players.

Reinforcement learning is particularly suited for game AI due to its ability to learn optimal strategies through trial and error.

Reinforcement Learning in Game AI

Reinforcement learning and related self-play techniques have been applied successfully to games such as chess, Go, and poker, producing agents that have defeated top human professionals.

Notable Game AI Achievements with RL

One of the most famous examples of RL in game AI is DeepMind’s AlphaGo, which became the first AI to defeat a human world champion in the game of Go 🏆.

Another notable example is OpenAI’s Dota 2-playing bot, OpenAI Five, which has defeated professional teams in the popular multiplayer online battle arena (MOBA) game.

Building Reinforcement Learning Models

Tools and Frameworks

Several tools and frameworks are available for implementing reinforcement learning models, including:

- TensorFlow: A popular open-source machine learning library developed by Google.

- PyTorch: An open-source machine learning library originally developed by Facebook’s (now Meta’s) AI Research lab (FAIR).

- OpenAI Gym: A toolkit for developing and comparing reinforcement learning algorithms; it is now maintained as Gymnasium by the Farama Foundation.

- RLlib: A library for reinforcement learning built on top of the Ray distributed computing framework.

Tips for Successful RL Model Training

Here are some tips for successfully training reinforcement learning models:

- Choose the right exploration-exploitation strategy: Balancing exploration and exploitation is crucial in RL. Common strategies include epsilon-greedy, softmax action selection, and upper confidence bound (UCB).

- Use appropriate reward shaping: Designing a good reward function is vital for effective RL. Reward shaping adds intermediate rewards or otherwise adjusts the reward function to guide the agent towards the desired behavior; poorly designed shaping can lead the agent to exploit the extra rewards instead of solving the actual task.

- Optimize hyperparameters: Tuning the learning rate, discount factor, and other hyperparameters can significantly impact the performance of RL algorithms. Use techniques like grid search, random search, or Bayesian optimization to find good hyperparameter values.

- Employ parallelization and distributed computing: Reinforcement learning can be computationally expensive. Using parallelization and distributed computing can significantly speed up the learning process.
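Of the exploration strategies mentioned in the tips above, softmax action selection and UCB are less self-explanatory than epsilon-greedy, so here is a minimal sketch of each for a single state. It assumes you already have a vector of Q-value estimates and, for UCB, a count of how often each action has been tried; the function names, the temperature `tau`, and the exploration constant `c` are illustrative choices, and the UCB score is the common UCB1-style form Q(a) + c·sqrt(ln t / N(a)).

```python
import numpy as np


def softmax_action(q_values, tau, rng):
    """Sample an action with probability proportional to exp(Q / tau).

    Lower tau -> closer to greedy; higher tau -> closer to uniform random.
    Returns (action, probabilities).
    """
    prefs = np.asarray(q_values, dtype=float) / tau
    prefs -= prefs.max()  # subtract the max for numerical stability
    weights = np.exp(prefs)
    probs = weights / weights.sum()
    return int(rng.choice(len(probs), p=probs)), probs


def ucb_action(q_values, counts, t, c=2.0):
    """Pick the action maximizing Q(a) + c * sqrt(ln t / N(a)).

    Untried actions (N(a) == 0) are selected first.
    """
    counts = np.asarray(counts, dtype=float)
    untried = np.flatnonzero(counts == 0)
    if untried.size > 0:
        return int(untried[0])
    bonus = c * np.sqrt(np.log(t) / counts)  # larger for rarely tried actions
    return int(np.argmax(np.asarray(q_values, dtype=float) + bonus))


rng = np.random.default_rng(0)
action, probs = softmax_action([1.0, 2.0, 0.5], tau=0.5, rng=rng)
print(action, probs.round(3))
print(ucb_action([1.0, 2.0, 0.5], counts=[10, 50, 2], t=62))
```

Softmax explores in proportion to how promising each action looks, while UCB deliberately favors actions whose estimates are still uncertain because they have been tried only a few times.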

In conclusion, reinforcement learning is a powerful machine learning technique with a wide range of applications, from game AI to robotics and healthcare.

With the right tools, frameworks, and strategies, you can harness the power of RL to build intelligent agents capable of solving complex real-world problems.

So, why not dive into reinforcement learning today and explore the fascinating world of AI-driven decision-making? 😃

Thank you for reading our blog; we hope you found the information helpful. If you found it useful, we invite you to follow this blog and share it with your colleagues and friends.

Share your thoughts and ideas in the comments below. To get in touch with us, please send an email to dataspaceconsulting@gmail.com or contactus@dataspacein.com.

You can also visit our website – DataspaceAI