Autoencoders and variational autoencoders are two deep learning techniques that learn useful representations from unlabeled data, which makes them valuable in a wide range of applications.

In this article, we’ll dive into the world of autoencoders (AEs) and variational autoencoders (VAEs), providing detailed explanations, examples, and code to help you understand how they work and where they can be applied.

Autoencoders: Uncovering Hidden Patterns

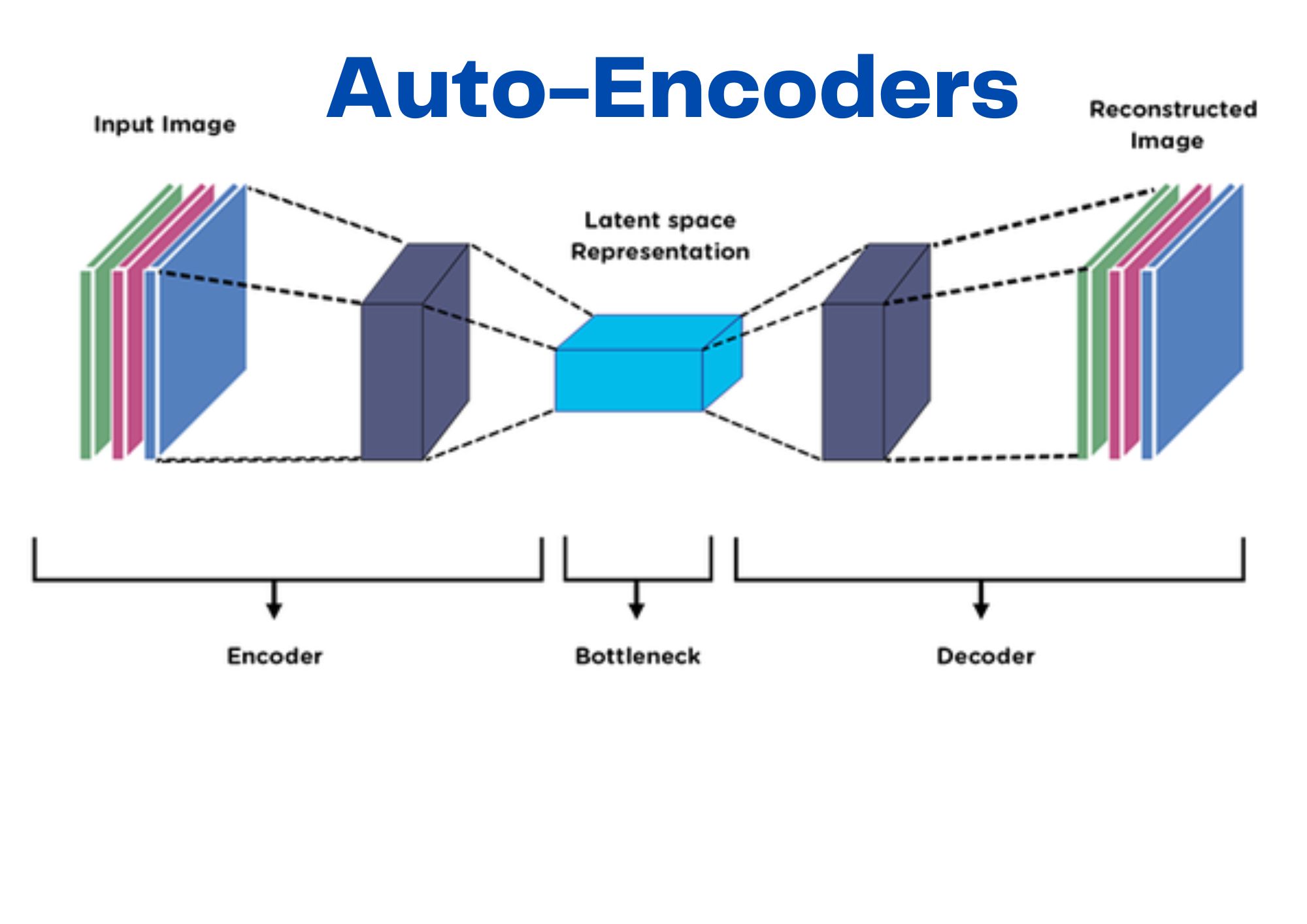

Autoencoders are a type of artificial neural network designed to learn efficient representations of data through unsupervised learning.

They consist of two main components: an encoder, which compresses the input data into a lower-dimensional representation, and a decoder, which reconstructs the input data from the compressed representation.

Autoencoder Architecture

The architecture of an autoencoder consists of an input layer, one or more hidden layers, and an output layer with the same dimensionality as the input.

The encoder maps the input data to a lower-dimensional representation at the narrowest hidden layer (often called the bottleneck or code), while the decoder reconstructs the original input from that compressed representation.

Training adjusts the network's weights to minimize the reconstruction error, that is, the difference between the input data and the reconstructed output.
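Two common ways to measure reconstruction error are mean squared error and, for inputs scaled to [0, 1] like normalized MNIST pixels, binary cross-entropy (the loss the TensorFlow example in this article compiles with). The minimal plain-Python sketch below computes both for one flattened input and a reconstruction; `mse` and `binary_cross_entropy` are illustrative helper names, not library functions, and the vectors are made-up values.

```python
import math


def mse(x, x_hat):
    """Mean squared error between an input vector and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def binary_cross_entropy(x, x_hat, eps=1e-7):
    """Mean binary cross-entropy; assumes every value of x and x_hat lies in [0, 1]."""
    total = 0.0
    for a, b in zip(x, x_hat):
        b = min(max(b, eps), 1 - eps)  # clip so log() never sees 0
        total += -(a * math.log(b) + (1 - a) * math.log(1 - b))
    return total / len(x)


x = [0.0, 1.0, 0.5, 1.0]      # e.g. normalized pixel values
x_hat = [0.1, 0.9, 0.5, 0.8]  # a hypothetical reconstruction
print(mse(x, x_hat))                   # roughly 0.015
print(binary_cross_entropy(x, x_hat))
```

A perfect reconstruction gives an MSE of 0. Binary cross-entropy is also smallest when the reconstruction equals the input, although for non-binary values such as 0.5 that minimum is above zero.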

Applications of Autoencoders

Autoencoders have found a wide range of applications, including:

- Image compression: Autoencoders can learn to compress images into a lower-dimensional representation and then reconstruct the image with minimal loss of information.

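- Denoising: When trained to reconstruct clean data from deliberately corrupted copies (for example, images with added noise), autoencoders learn to remove noise from images or other data, providing cleaner, higher-quality outputs.

- Anomaly detection: An autoencoder trained on normal data reconstructs similar data well but reconstructs unusual data poorly, so data points with a high reconstruction error can be flagged as anomalies.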
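The anomaly-detection use can be sketched independently of any particular model: an autoencoder trained on normal data reconstructs normal points with low error, so points whose reconstruction error exceeds a threshold are flagged. In this minimal sketch the error values are made-up illustrative numbers, the function names are hypothetical, and the threshold rule (mean plus three standard deviations of the errors on normal data) is one common heuristic assumed for illustration; a high percentile of the normal-data errors is another option.

```python
import statistics


def threshold_from_normal(errors, k=3.0):
    """Hypothetical rule: mean + k standard deviations of errors on normal data."""
    return statistics.mean(errors) + k * statistics.stdev(errors)


def flag_anomalies(errors, threshold):
    """Return the indices of samples whose reconstruction error exceeds the threshold."""
    return [i for i, e in enumerate(errors) if e > threshold]


# Made-up reconstruction errors from an autoencoder trained on normal data
normal_errors = [0.010, 0.012, 0.009, 0.011, 0.013, 0.010]
threshold = threshold_from_normal(normal_errors)

# Made-up errors for new, unseen samples
new_errors = [0.011, 0.095, 0.012, 0.300]
print(flag_anomalies(new_errors, threshold))  # [1, 3]
```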

Variational Autoencoders: Adding Probabilistic Flavor

Variational autoencoders are a type of autoencoder that introduces a probabilistic twist to the encoding and decoding process.

They are particularly useful when you need to generate new samples from the learned data distribution.

Variational Autoencoder Architecture

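The architecture of a variational autoencoder is similar to that of a traditional autoencoder, with one key difference: instead of mapping each input to a single deterministic code, the encoder outputs the parameters of a probability distribution over codes, usually a Gaussian with a diagonal covariance.

This is achieved by giving the encoder two output layers that produce the mean and the variance (in practice, usually the log-variance) of that distribution. A latent vector is sampled from it and passed to the decoder, and a Kullback-Leibler (KL) divergence term in the loss keeps these distributions close to a standard normal prior, which is what later makes it possible to generate new data by sampling from that prior.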
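The sampling step can be sketched in plain Python. This is a minimal illustration, assuming (as the TensorFlow example in this article does) that the encoder outputs a mean and a log-variance per latent dimension; the function names `reparameterize` and `kl_to_standard_normal` are hypothetical. Writing the sample as the mean plus the standard deviation times standard-normal noise, the so-called reparameterization trick, isolates the randomness in the noise term so that gradients can flow through the mean and log-variance during training. The second function is the closed-form KL divergence from a diagonal Gaussian to the standard normal prior.

```python
import math
import random


def reparameterize(z_mean, z_log_var, rng):
    """Sample z = mean + exp(0.5 * log_var) * epsilon, with epsilon ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(z_mean, z_log_var)]


def kl_to_standard_normal(z_mean, z_log_var):
    """Closed-form KL(N(mean, exp(log_var)) || N(0, I)), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m ** 2 - math.exp(lv)
                      for m, lv in zip(z_mean, z_log_var))


# Hypothetical encoder outputs for one input, with a 2-dimensional latent space
z_mean = [0.5, -1.0]
z_log_var = [0.0, math.log(0.25)]  # variances 1.0 and 0.25

rng = random.Random(0)
print(reparameterize(z_mean, z_log_var, rng))    # a random latent vector near z_mean
print(kl_to_standard_normal(z_mean, z_log_var))  # about 0.943
```

The KL term is zero only when the mean is 0 and the variance is 1 in every dimension, so minimizing it pulls each input's distribution toward the prior.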

Variational Autoencoder Example

Let’s consider a simple example of using a variational autoencoder for generating new images of handwritten digits.

The VAE would be trained on a dataset of handwritten digit images, such as the MNIST dataset. During the training process, the VAE learns a distribution over the possible representations of the digit images.

Once trained, we can draw new latent vectors from the standard normal prior and pass them through the decoder to generate novel images of handwritten digits.
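Generation itself needs only the prior and the decoder. The sketch below is a toy, assuming a hypothetical one-layer decoder with random weights in place of a trained network, and tiny hypothetical sizes (`LATENT_DIM = 2`, `OUTPUT_DIM = 4`) instead of MNIST's 784 pixels. With a real trained VAE, the same loop of drawing z from N(0, I) and decoding it produces new digit images.

```python
import math
import random

LATENT_DIM = 2  # hypothetical; the TensorFlow VAE in this article uses 64
OUTPUT_DIM = 4  # hypothetical; a flattened MNIST image has 784 pixels

rng = random.Random(42)

# Hypothetical stand-in for trained decoder weights (a real decoder is learned)
W = [[rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)] for _ in range(OUTPUT_DIM)]
b = [0.0] * OUTPUT_DIM


def decode(z):
    """Toy decoder: one linear layer plus a sigmoid, giving values in (0, 1) like pixel intensities."""
    return [1.0 / (1.0 + math.exp(-(sum(w * zi for w, zi in zip(row, z)) + bias)))
            for row, bias in zip(W, b)]


def generate(n):
    """Draw n latent vectors from the prior N(0, I) and decode each into a new sample."""
    return [decode([rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]) for _ in range(n)]


samples = generate(3)
for s in samples:
    print([round(v, 3) for v in s])
```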

Programming Example: Building an Autoencoder with TensorFlow

Here’s a simple example of how to build a basic autoencoder using TensorFlow:

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.models import Model

# Define the autoencoder architecture
input_layer = Input(shape=(784,))
encoded = Dense(128, activation='relu')(input_layer)
decoded = Dense(784, activation='sigmoid')(encoded)

# Create the autoencoder model
autoencoder = Model(input_layer, decoded)

# Compile the model
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

# Load the MNIST dataset
mnist = tf.keras.datasets.mnist
(x_train, _), (x_test, _) = mnist.load_data()

# Normalize the data
x_train, x_test = x_train / 255.0, x_test / 255.0

# Flatten the data
x_train = x_train.reshape((len(x_train), 784))
x_test = x_test.reshape((len(x_test), 784))

# Train the autoencoder
autoencoder.fit(x_train, x_train, epochs=50, batch_size=256, shuffle=True, validation_data=(x_test, x_test))
```

This code snippet demonstrates how to create a simple autoencoder using TensorFlow and train it on the MNIST dataset.

The autoencoder learns to compress each 784-pixel input image into a 128-dimensional representation and then reconstruct the image from that compressed representation.

Programming Example: Building a Variational Autoencoder with TensorFlow

Let’s now build a variational autoencoder using TensorFlow:

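```python
import tensorflow as tf
from tensorflow.keras import layers
from tensorflow.keras.models import Model

latent_dim = 64

# Sampling layer: applies the reparameterization trick and registers the KL loss.
# (A loss function passed to compile() cannot reference the symbolic tensors
# z_mean and z_log_var directly in TensorFlow 2, so the KL term is added here.)
class Sampling(layers.Layer):
    def call(self, inputs):
        z_mean, z_log_var = inputs
        # epsilon ~ N(0, I) with the same shape as z_mean
        epsilon = tf.random.normal(shape=tf.shape(z_mean))
        # KL divergence from N(z_mean, exp(z_log_var)) to N(0, I), summed over latent dims
        kl_loss = -0.5 * tf.reduce_sum(
            1 + z_log_var - tf.square(z_mean) - tf.exp(z_log_var), axis=-1)
        self.add_loss(tf.reduce_mean(kl_loss))
        # z = mean + standard deviation * epsilon
        return z_mean + tf.exp(0.5 * z_log_var) * epsilon

# Encoder: maps each image to the mean and log-variance of a Gaussian
input_layer = layers.Input(shape=(784,))
encoded = layers.Dense(128, activation='relu')(input_layer)
z_mean = layers.Dense(latent_dim)(encoded)
z_log_var = layers.Dense(latent_dim)(encoded)
z = Sampling()([z_mean, z_log_var])

# Decoder: a separate model so it can later generate images from random z
latent_input = layers.Input(shape=(latent_dim,))
decoded = layers.Dense(128, activation='relu')(latent_input)
output_layer = layers.Dense(784, activation='sigmoid')(decoded)
decoder = Model(latent_input, output_layer)

# Create the VAE model
vae = Model(input_layer, decoder(z))

# Reconstruction term: binary cross-entropy summed over the 784 pixels
def reconstruction_loss(x, x_decoded):
    return 784 * tf.keras.losses.binary_crossentropy(x, x_decoded)

# Compile the model (the KL term is added automatically through add_loss)
vae.compile(optimizer='adam', loss=reconstruction_loss)

# Load the MNIST dataset and preprocess it as before

# Train the VAE
vae.fit(x_train, x_train, epochs=50, batch_size=256, shuffle=True, validation_data=(x_test, x_test))

# Generate new digits by decoding samples drawn from the prior N(0, I)
new_images = decoder.predict(tf.random.normal((16, latent_dim)))
```

This code snippet demonstrates how to create a variational autoencoder using TensorFlow and train it on the MNIST dataset.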

The VAE learns a distribution over the possible representations of the images and can be used to generate new samples from this distribution.
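For reference, the per-example objective that a VAE of this shape minimizes (the negative evidence lower bound, assuming a Bernoulli decoder over pixels and a standard normal prior) is written below for 784 pixels and 64 latent dimensions, with $\hat{x}_i$ the decoder output, $\mu_j$ corresponding to `z_mean`, and $\log\sigma_j^2$ to `z_log_var`. The first term is the reconstruction error and the second is the closed-form KL divergence; implementations that average rather than sum either term change the relative weight of the two.

$$
\mathcal{L}(x) = -\sum_{i=1}^{784}\Big[x_i \log \hat{x}_i + (1 - x_i)\log(1 - \hat{x}_i)\Big] - \frac{1}{2}\sum_{j=1}^{64}\Big(1 + \log\sigma_j^2 - \mu_j^2 - \sigma_j^2\Big)
$$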

Autoencoders and variational autoencoders are powerful unsupervised learning techniques that can be used for a wide range of applications, including image compression, denoising, and anomaly detection.

By leveraging these deep learning techniques, you can uncover hidden patterns in your data and generate novel samples from the learned data distribution.

So go ahead, unleash the power of unsupervised learning with autoencoders and variational autoencoders!

Thank you for reading our blog. We hope you found the information provided helpful and informative. We invite you to follow and share this blog with your colleagues and friends if you found it useful.

Share your thoughts and ideas in the comments below. To get in touch with us, please send an email to dataspaceconsulting@gmail.com or contactus@dataspacein.com.

You can also visit our website – DataspaceAI